Welcome to EzEpoch BETA

Choose how you'd like to start your AI training project

Name Your Project

Give your training setup a descriptive name

🎯 Project Name

Choose a name that describes your training configuration

💡 Suggestions:

Data Processing - New Project

Upload, clean, and prepare your training data

📤 Upload Training Data

Select your training data files (.json, .jsonl, .txt, .csv, .tsv)

Drag & drop files here

or

Supported formats: .json, .jsonl, .txt, .csv, .tsv

Skip Data Upload

Have a large dataset or prefer to add data later?

Create a package without uploading data now - you'll add your data files to the package after download.

How does this work?

1. Skip to Training Setup →

2. Configure your model and settings →

3. Download package with empty data/ folder →

4. Add your training files to the data/ folder before training
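Before starting a run from a package that was built without data, it can help to confirm that the data/ folder actually holds usable files. The sketch below is illustrative only: `find_training_files` is a hypothetical helper, not part of the EzEpoch package, and the extension list is taken from the supported formats shown on the upload screen.

```python
from pathlib import Path

# Extensions taken from the "Supported formats" line on the upload screen.
SUPPORTED_EXTENSIONS = {".json", ".jsonl", ".txt", ".csv", ".tsv"}


def find_training_files(data_dir):
    """Return the supported training files in data_dir, sorted by path."""
    root = Path(data_dir)
    if not root.is_dir():
        return []
    return sorted(
        p for p in root.iterdir()
        if p.is_file() and p.suffix.lower() in SUPPORTED_EXTENSIONS
    )


if __name__ == "__main__":
    files = find_training_files("data")
    if files:
        for path in files:
            print(f"{path.name}: {path.stat().st_size} bytes")
    else:
        print("data/ is empty or missing: add training files before training")
```

Run it from the unpacked package directory. An empty result means step 4 has not been completed yet.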

✅ Perfect for large datasets (>1GB)

✅ Saves upload time

✅ Full instructions included in package

📁 Previously Uploaded Data

Select from your previously uploaded files or upload new ones above

Smart Data Optimization

After upload, use Data Analysis & Cleaning to optimize your files for training performance.

- Detects and fixes content issues

- Removes short, unhelpful entries

- Splits overly long content into manageable chunks

- Preserves question-answer structure for training
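As a rough illustration of what these cleaning steps can look like, here is a minimal sketch. It is not EzEpoch's implementation: the record format (`question` / `answer` keys) and the `min_chars` / `max_chars` thresholds are assumptions chosen for the example.

```python
def clean_records(records, min_chars=20, max_chars=2000):
    """Drop short entries and split long answers, keeping each question attached.

    records is a list of {"question": str, "answer": str} dicts (an assumed
    format); min_chars and max_chars are hypothetical thresholds.
    """
    cleaned = []
    for rec in records:
        question = rec.get("question", "").strip()
        answer = rec.get("answer", "").strip()
        if not question or len(answer) < min_chars:
            continue  # remove empty or short, unhelpful entries
        # Split an overly long answer into chunks of at most max_chars,
        # repeating the question so every chunk stays a question-answer pair.
        for start in range(0, len(answer), max_chars):
            cleaned.append({
                "question": question,
                "answer": answer[start:start + max_chars],
            })
    return cleaned
```

Splitting on a fixed character count can cut a sentence in half. A production cleaner would more likely split on sentence or paragraph boundaries.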

📤 Uploading to Cloud Storage

Uploading your files securely to cloud storage

🧹 Data Cleaning

Automatic data cleaning and quality assessment

Upload data to see cleaning results

🖼️ Link Images (Optional)

For multimodal training, link images to your text data

✅ Data Review

Review your processed data before training setup

Process data to see summary

Training Setup - New Project

Configure your AI model and training parameters

All parameters are automatically optimized for your selected model and GPU. Simply choose your model and GPU - the system handles batch size, memory optimization, and compatibility automatically!

🎯 Project Name

Give your training project a descriptive name

🤖 Model Selection

Choose your AI model and training type

🖥️ GPU Configuration

Configure GPU settings and memory optimization

⚙️ Training Parameters

Configure core training settings

- Sorts data by difficulty (easy → hard)

- Model learns basics first, then complex patterns

- 20-40% better convergence & accuracy

- Prevents early overfitting
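The easy-to-hard ordering described above is commonly called curriculum learning. The sketch below shows the basic idea under one big assumption: it uses text length as a stand-in for difficulty. How EzEpoch actually scores difficulty is not stated here, and `curriculum_order` is a hypothetical name.

```python
def curriculum_order(examples, difficulty=len):
    """Return examples sorted from easiest to hardest.

    difficulty is any scoring function; text length is only a stand-in here.
    """
    return sorted(examples, key=difficulty)


samples = [
    "Explain gradient checkpointing and when it trades compute for memory.",
    "What is a GPU?",
    "Define batch size in one sentence.",
]
for text in curriculum_order(samples):
    print(len(text), text)
```

Any other scoring function (for example, loss under a small reference model) can be passed as `difficulty` without changing the rest of the code.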

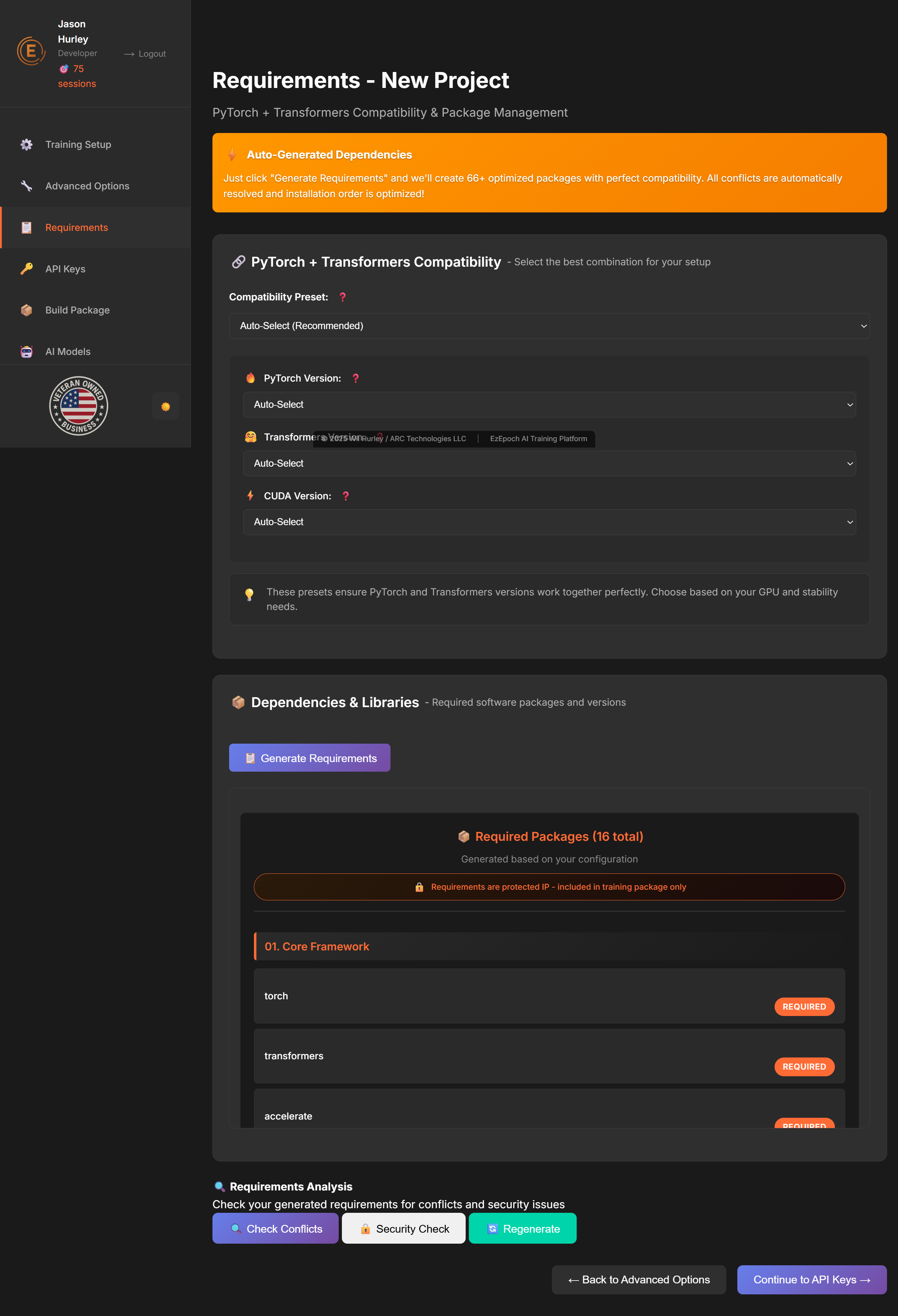

Requirements - New Project

PyTorch + Transformers Compatibility & Package Management

Just click "Generate Requirements" and we'll create 66+ optimized packages with perfect compatibility. All conflicts are automatically resolved and installation order is optimized!

🔗 PyTorch + Transformers Compatibility

Select the best combination for your setup

🔧 Individual Version Selection

Select specific versions or enable Auto to let the system choose compatible versions

📦 Dependencies & Libraries

Required software packages and versions

Click "Generate Requirements" to create your dependency list.

Advanced Options - New Project

Fine-tune your training with advanced parameters

All settings are pre-optimized for your chosen AI model and GPU. You can proceed through the entire process with these defaults for excellent results, or customize any parameter below for specific needs.

🚀 AI Advanced Setup

Let AI optimize your training parameters automatically

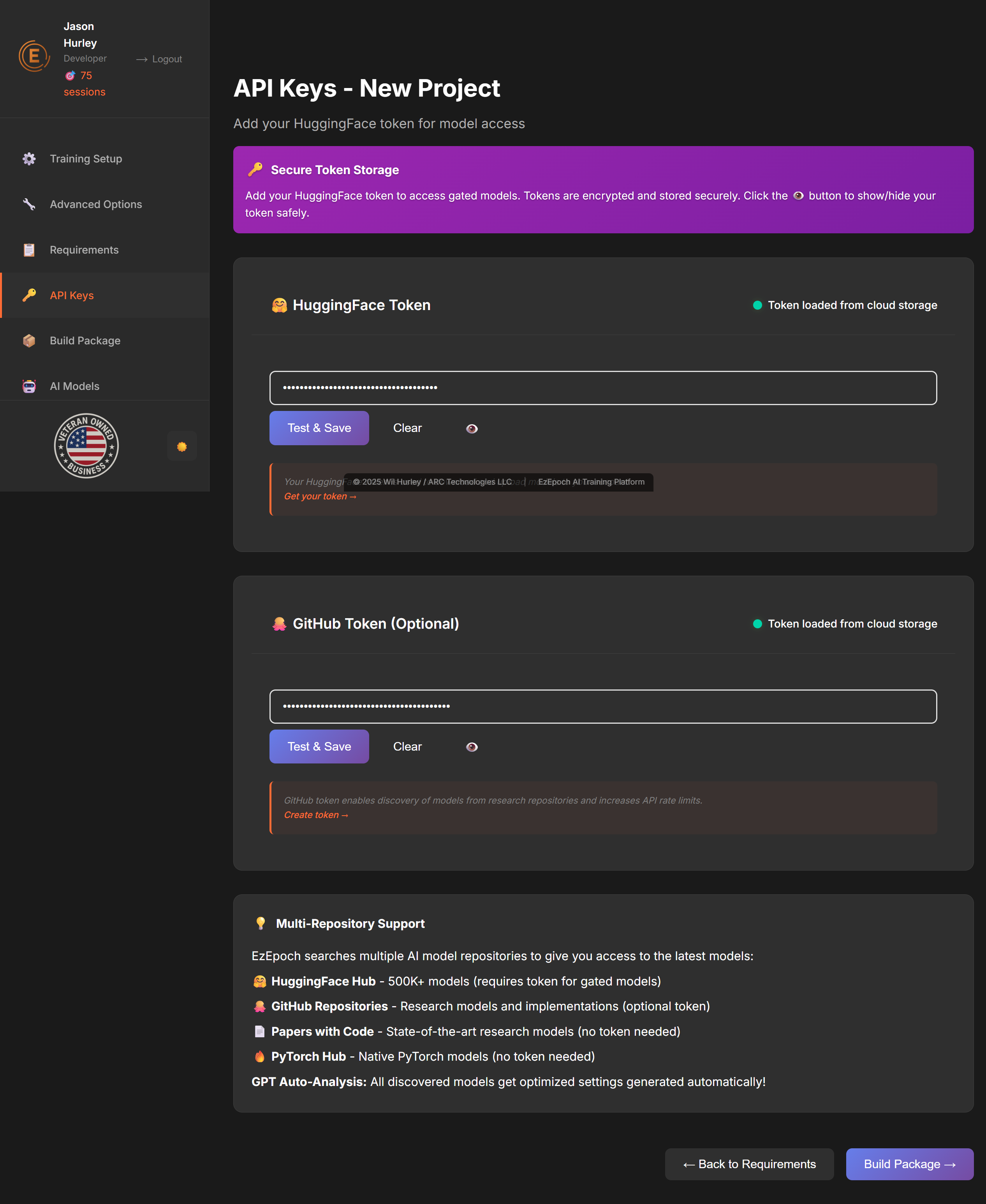

Model Access - New Project

Add your HuggingFace & GitHub tokens for model access

Add your HuggingFace token to access gated models. Tokens are encrypted and stored securely. Click the 👁️ button to show/hide your token safely.

🤗 HuggingFace Token

Your HuggingFace token is used to download and upload models to your account.

Get your token →

🐙 GitHub Token (Optional)

GitHub token enables discovery of models from research repositories and increases API rate limits.

Create token →

Multi-Repository Support

EzEpoch searches multiple AI model repositories to give you access to the latest models:

- 🤗 HuggingFace Hub - 500K+ models (requires token for gated models)

- 🐙 GitHub Repositories - Research models and implementations (optional token)

- 📄 Papers with Code - State-of-the-art research models (no token needed)

- 🔥 PyTorch Hub - Native PyTorch models (no token needed)

GPT Auto-Analysis: All discovered models get optimized settings generated automatically!

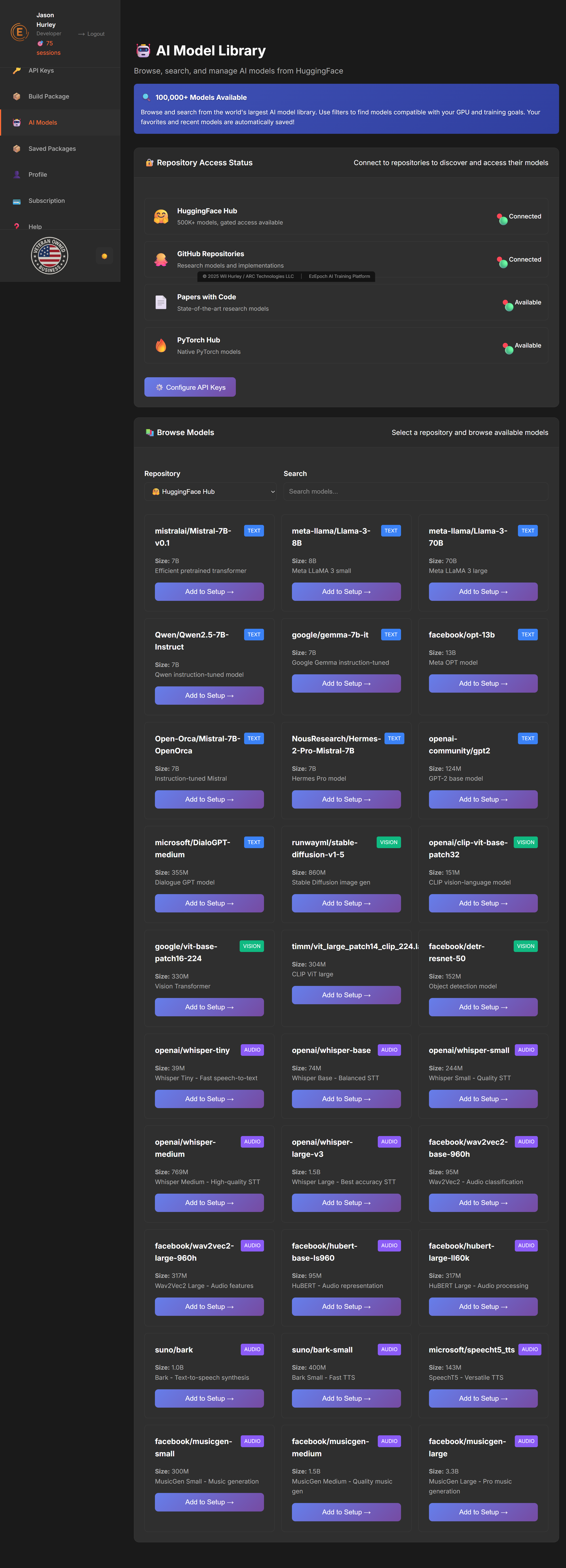

🤖 AI Model Library

Browse, search, and manage AI models from HuggingFace

Browse and search from the world's largest AI model library. Use filters to find models compatible with your GPU and training goals. Your favorites and recent models are automatically saved!

🔐 Repository Access Status

Connect to repositories to discover and access their models

HuggingFace Hub

500K+ models, gated access available

GitHub Repositories

Research models and implementations

Papers with Code

State-of-the-art research models

PyTorch Hub

Native PyTorch models

📚 Browse Models

Select a repository and browse available models

Select a repository to view available models...

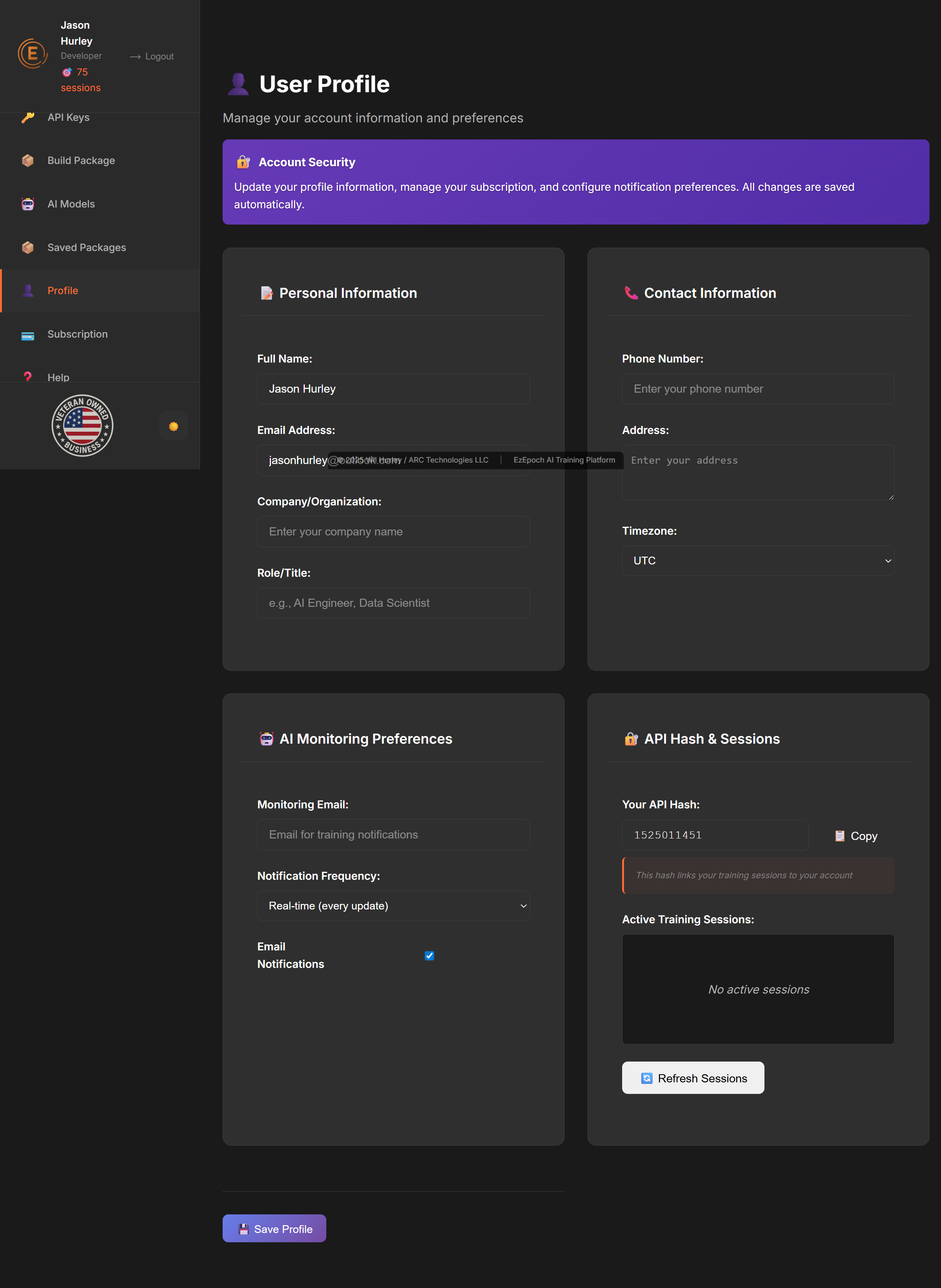

👤 User Profile

Manage your account information and preferences

Update your profile information, manage your subscription, and configure notification preferences. All changes are saved automatically.

📝 Personal Information

📞 Contact Information

🤖 AI Monitoring Preferences

🔐 API Hash & Sessions

No active sessions

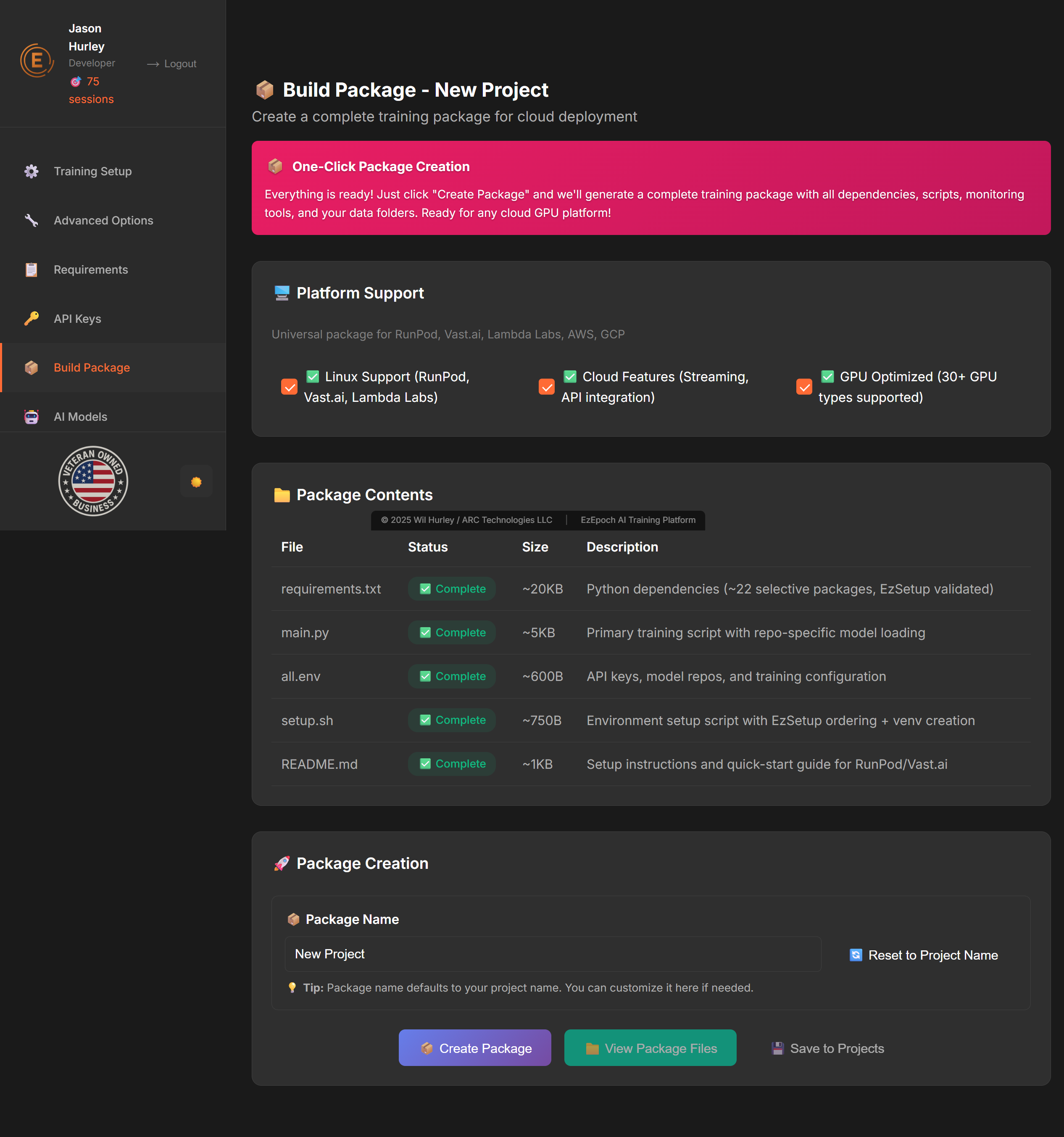

📦 Build Package - New Project

Create a complete training package for cloud deployment

Everything is ready! Just click "Create Package" and we'll generate a complete training package with all dependencies, scripts, monitoring tools, and your data folders. Ready for any cloud GPU platform!

🖥️ Platform Support

Universal package for RunPod, Vast.ai, Lambda Labs, AWS, GCP

📁 Package Contents

| File | Status | Size | Description |
|---|---|---|---|
| requirements.txt | ⏳ Pending | ~20KB | Python dependencies (~22 selective packages, EzSetup validated) |
| main.py | ✅ Complete | ~5KB | Primary training script with repo-specific model loading |
| all.env | ✅ Complete | ~600B | API keys, model repos, and training configuration |
| setup.sh | ✅ Complete | ~750B | Environment setup script with EzSetup ordering + venv creation |
| README.md | ✅ Complete | ~1KB | Setup instructions and quick-start guide for RunPod/Vast.ai |
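If you want to sanity-check the configuration file before launching, a small parser is enough. This sketch assumes all.env uses plain `KEY=VALUE` lines. The key names shown (`HF_TOKEN`, `MODEL_REPO`, `GITHUB_TOKEN`) are hypothetical placeholders, since the real keys are not listed here.

```python
def parse_env_file(text):
    """Parse KEY=VALUE lines, skipping blank lines and # comments."""
    values = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def missing_keys(values, required):
    """Return the required keys that are absent or empty."""
    return [key for key in required if not values.get(key)]


# Hypothetical contents; the real key names are not documented here.
example = """
# training configuration
HF_TOKEN=hf_xxx
MODEL_REPO="mistralai/Mistral-7B-v0.1"
GITHUB_TOKEN=
"""
config = parse_env_file(example)
print(missing_keys(config, ["HF_TOKEN", "MODEL_REPO", "GITHUB_TOKEN"]))
```

To check a real file, read it with `open("all.env").read()` and pass the text to `parse_env_file`.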

🚀 Package Creation

☁️ Cloud GPU Deployment - New Project

Deploy your training job to Vast.ai or RunPod with one click

Connect your Vast.ai or RunPod account, browse available GPUs, and deploy your training package directly to the cloud.

🔑 Cloud Provider API Keys

📦 Recent Packages (Last 10)

💡 Your last 10 packages will appear here

Build packages in the "Build Package" tab, then click Refresh to see them here

🖥️ Available GPUs

📋 Your Requirements (from Setup tab)

💰 Sorted by lowest price — Best deals first! (Uncheck "Match Requirements" to see all options)

Connect your API keys above to browse available GPUs

📊 Training Dashboard

Monitor your active training sessions in real-time

📁 Your Training Sessions

💳 Subscription & Billing

Manage your plan, sessions, and billing

Pay only when training actually starts, not during package creation. Automatic refunds for failures under 15 minutes. Sessions roll over at 50% for paid plans.

📊 Current Plan

📈 Recent Training Activity

Your recent training sessions and resource usage

🧮 How Sessions Work - Model Size Matters

Larger models require more GPT monitoring calls, so they use more sessions

0-20B Models

Mistral 7B, Llama 3.1 8B, Qwen 14B, etc.

21-70B Models

Llama 3.1 70B, Falcon 40B, Mixtral 8x7B, etc.

71-200B Models

Llama 3.2 90B, BLOOM 176B, Falcon 180B, etc.

200B+ Models

Llama 3.1 405B, GPT-3 scale models, etc.

💡 Why Session Multipliers?

Larger models generate more training logs and run longer, so they need more GPT analysis calls (one every 100 steps). A 7B model might train for 4 hours, while a 200B model can run for 40+ hours. More monitoring = more GPT API costs = more sessions used. This keeps pricing fair and aligned with actual resource usage.

📊 Example Calculations:

- Starter Plan (5 sessions): Train 5x 7B models OR 2x 70B models OR 1x 200B model

- Developer Plan (20 sessions): Train 20x 7B models OR 10x 70B models OR 5x 200B models

- Professional Plan (50 sessions): Train 50x 7B models OR 25x 70B models OR 12x 200B models
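The numbers above are consistent with a simple rule: each run costs a fixed number of sessions, and a plan covers as many whole runs as fit. The sketch below reproduces the three examples. The per-run costs (1, 2, and 4 sessions) are inferred from those examples rather than published figures, and `runs_available` is a hypothetical helper.

```python
# Sessions consumed per training run, inferred from the example calculations.
SESSIONS_PER_RUN = {"7B": 1, "70B": 2, "200B": 4}


def runs_available(plan_sessions, model_size):
    """Whole training runs a plan covers for a given model size."""
    return plan_sessions // SESSIONS_PER_RUN[model_size]


for plan, sessions in [("Starter", 5), ("Developer", 20), ("Professional", 50)]:
    counts = {size: runs_available(sessions, size) for size in SESSIONS_PER_RUN}
    print(plan, counts)
```

The integer division explains why 5 sessions cover only two 70B runs and 50 sessions cover twelve 200B runs: leftover sessions that do not make up a full run are not counted.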

🚀 Available Plans

Choose the plan that fits your training needs

🛒 Add-On Products & One-Time Purchases

Enhance your workflow with standalone tools or buy sessions as needed

🛡️ AI Training Insurance - Zero-Loss Guarantee

We turn 40% industry failure rates into 99% success rates. Guaranteed.

✅ Our 99% Success Guarantee

Use EzEpoch's recommended settings and let our AI Guardian monitor your training. If it fails, we refund you. Period.

✅ Covered - Full Refund

AI Guardian Failed

Used recommended settings but training crashed and our AI didn't recover it? Full refund.

Setup Configuration Errors

Package won't create, requirements wrong, or settings miscalculated? Full refund.

Platform Errors

Bootstrap auth fails, dashboard doesn't connect, or monitoring stops working? Full refund.

First Training Extended Protection

Your first training gets extra protection (30 minutes) while you learn the platform.

❌ Not Covered - No Automatic Refund

Custom Settings Override

Changed batch size, learning rate, or other settings manually? You declined our AI protection.

Data Quality Issues

Training failed due to corrupted data, wrong format, or insufficient data? That's your data, not our platform.

GPU Provider Issues

RunPod crashed? Vast.ai down? GPU out of memory with your custom data? That's between you and your GPU provider.

User Stopped Training

You clicked stop/pause and decided not to continue? That's not a platform failure.

💡 We Want You To Succeed!

🔄 Crash Recovery: Our AI Guardian automatically analyzes crashes and restarts training with corrected settings. Resumes from last checkpoint - no progress lost!

🔁 Unlimited Restarts: Each session includes unlimited restart attempts. Training keeps going until YOU succeed.

🎯 Expert Support: Stuck? Our team will help debug your setup, review your settings, and guide you to success.

📊 Dashboard Control: Monitor live metrics, adjust settings on-the-fly, and let GPT optimize every 100 steps automatically.
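To picture what "every 100 steps" means in a training loop, here is a minimal sketch of a periodic monitoring hook. It is not EzEpoch's monitoring code: `train_with_monitoring`, `fake_train_step`, and `mean_loss` are hypothetical stand-ins, and a plain average replaces the GPT call.

```python
def train_with_monitoring(total_steps, train_step, analyze, interval=100):
    """Run train_step every step and call analyze once per `interval` steps."""
    history = []
    reports = []
    for step in range(1, total_steps + 1):
        history.append(train_step(step))
        if step % interval == 0:
            # Hand the most recent window of losses to the analysis hook.
            reports.append(analyze(step, history[-interval:]))
    return reports


# Stand-ins: a fake loss curve and a plain average instead of a GPT call.
def fake_train_step(step):
    return 1.0 / step


def mean_loss(step, recent_losses):
    return {"step": step, "mean_loss": sum(recent_losses) / len(recent_losses)}


reports = train_with_monitoring(350, fake_train_step, mean_loss)
print([r["step"] for r in reports])
```

The number of analysis calls grows with the number of training steps, which is why longer runs involve more monitoring.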

🦶 So Easy, Bigfoot Can Do It!

Industry Standard: 40% of AI training jobs fail due to configuration errors, memory issues, and instability.

With EzEpoch: 99% success rate because our AI calculates perfect settings, monitors every 100 steps, and auto-recovers from crashes.

Think of it like insurance: We insure your SETUP and MONITORING. You provide the GPU and data. Follow our recommended settings → We guarantee success. Override our settings → You're on your own (but we'll still help!).

💰 Request Refund

Training failed using our recommended settings? Request your refund below.

ℹ️ Refund Requirements

- Must have used EzEpoch's recommended settings (no custom overrides)

- Platform error must be verified (setup, monitoring, or dashboard failure)

- GPU provider issues are not covered (contact your GPU provider)

- Data quality issues are not covered (we'll help you fix your data)

- First training gets extended grace period automatically

📚 Complete EzEpoch Guide

Everything you need to know about AI training with smart recommendations

🚀 Quick Start - 5 Minutes to Training

Follow these steps to create your first training package

1️⃣ Choose Your Model

Select from 100+ pre-configured AI models. The system automatically detects model size and requirements.

2️⃣ Select GPU

Look for ⭐ stars - these GPUs can fully train your model!

3️⃣ Configure Training

The Advanced tab automatically selects optimal settings. Change precision, optimizer, or training method if needed.

4️⃣ Generate Package

Click "Create Package" - the system validates everything, resolves conflicts, and creates a ready-to-run training package!

⭐ GPU Star System

Instantly see which GPUs can handle your model

How It Works

When you select a model, the system calculates memory requirements for full fine-tuning with AdamW optimizer (the industry standard). GPUs with enough memory get a ⭐ star!

✅ With Star (⭐)

This GPU has enough memory to fully train your model with any optimizer and settings. Full control!

ℹ️ Without Star

This GPU needs LoRA or QLoRA for this model. You'll see a message with recommendations and alternative GPUs.

Example: Mistral 7B

⭐ H100 (80GB) ← Can fully train!

⭐ A100 (80GB) ← Can fully train!

L40S (48GB) ← LoRA/QLoRA recommended

RTX 4090 (24GB) ← LoRA/QLoRA recommended

The star system is based on full fine-tuning. LoRA/QLoRA can work on smaller GPUs with 90-95% of full training quality! Don't be afraid to use them.
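The exact memory formula behind the stars is not given here, so the sketch below is only a back-of-the-envelope version. It assumes 8 bytes per parameter (16-bit weights and gradients plus two 16-bit AdamW moments) and ignores activations. That value is a hypothetical choice that happens to reproduce the Mistral 7B example. Common mixed-precision AdamW estimates are closer to 16 bytes per parameter, which would not fit in 80GB.

```python
def full_finetune_memory_gb(num_params, bytes_per_param=8):
    """Rough memory for weights, gradients, and optimizer state, in GB."""
    return num_params * bytes_per_param / 1e9


def gets_star(num_params, gpu_memory_gb, bytes_per_param=8):
    """True if the estimated full fine-tuning footprint fits on the GPU."""
    return full_finetune_memory_gb(num_params, bytes_per_param) <= gpu_memory_gb


MISTRAL_7B_PARAMS = 7.24e9  # approximate parameter count

for name, mem in [("H100", 80), ("A100", 80), ("L40S", 48), ("RTX 4090", 24)]:
    mark = "star" if gets_star(MISTRAL_7B_PARAMS, mem) else "LoRA/QLoRA recommended"
    print(f"{name} ({mem}GB): {mark}")
```

Changing `bytes_per_param` shows how sensitive the result is to precision and optimizer choices, so treat the output as a starting point rather than a guarantee.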

🧠 Smart Optimizer System

Automatic compatibility checking and recommendations

What It Does

The Smart Optimizer analyzes your model + GPU combination and:

- ✅ Enables optimizers that will work

- ❌ Greys out optimizers that won't fit in memory

- ⭐ Recommends the best optimizer for your setup

- 📊 Shows memory usage for each option

Optimizer Comparison

| Optimizer | Memory | Quality | Best For |
|---|---|---|---|
| AdamW | Very High | 100% | Industry standard, best quality |
| 8-bit Adam ⭐ | Low | 99% | Recommended! 87% less memory, near-identical quality |
| Lion | Medium | 101% | Often better than AdamW, less memory |
| Adafactor | Very Low | 95% | Large models, memory-constrained |
| SGD | Medium | 85-90% | Simple tasks, experimentation |

The system automatically selects 8-bit Adam for most setups - it's the perfect balance of memory efficiency and quality!
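The memory column mostly reflects how much state each optimizer keeps per parameter. The sketch below filters optimizers by whether that state fits in a given memory budget. The byte counts are common approximations, not EzEpoch's figures, and `optimizers_that_fit` is a hypothetical helper. Adafactor is left out because its factored state does not scale as a fixed number of bytes per parameter.

```python
# Approximate optimizer-state bytes per parameter (assumptions for illustration).
STATE_BYTES = {
    "AdamW": 8,       # two 32-bit moment estimates
    "8-bit Adam": 2,  # two 8-bit moment estimates
    "Lion": 4,        # one 32-bit momentum buffer
    "SGD": 4,         # one 32-bit momentum buffer (momentum enabled)
}


def optimizer_state_gb(num_params, optimizer):
    """Estimated optimizer-state memory in GB."""
    return num_params * STATE_BYTES[optimizer] / 1e9


def optimizers_that_fit(num_params, free_gb):
    """Optimizers whose state fits in the memory left after weights and gradients."""
    return [
        name for name in STATE_BYTES
        if optimizer_state_gb(num_params, name) <= free_gb
    ]


print(optimizers_that_fit(7e9, free_gb=30))
```

Under these assumptions, a 7B-parameter model needs about 56GB of state for AdamW but about 14GB for 8-bit Adam, which is why the lower-memory options stay enabled on smaller GPUs.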

📋 Requirements

Generate dependencies and check for conflicts

Key Features:

- ✓ PyTorch + Transformers + CUDA compatibility presets

- ✓ Auto-Select preset chooses optimal versions for your GPU

- ✓ Automatic dependency generation (66+ optimized packages)

- ✓ Conflict-free installation with proper ordering

- ✓ Latest, Stable, Older, Legacy, and CPU-only presets available

💡 Tip: Use "Auto-Select (Recommended)" preset for best compatibility. It automatically picks the right PyTorch, Transformers, and CUDA versions for your selected GPU.

🔑 API Keys

Set up authentication for external services

Key Features:

- ✓ HuggingFace token for model access (required for gated models)

- ✓ GitHub token for research repositories (optional, increases rate limits)

- ✓ Eyeball button (👁️) to show/hide tokens securely

- ✓ Test & Save validates tokens before storing

- ✓ Multi-repository support: HuggingFace, GitHub, Papers with Code, PyTorch Hub

💡 Tip: Click the 👁️ button to toggle token visibility while entering. All tokens are encrypted and securely stored. Only the HuggingFace token is required for most models.

📦 Build Package

Create complete training packages for deployment

Key Features:

- ✓ Universal platform support (RunPod, Vast.ai, Lambda Labs, AWS, GCP)

- ✓ Package contents preview (requirements.txt, main.py, all.env, setup.sh, README.md)

- ✓ Custom package naming (defaults to project name)

- ✓ One-click package creation

- ✓ Core files streamed from server for updates and flexibility

💡 Tip: After creating your package, download the ZIP and upload it to your preferred GPU provider. Most files (like main.py and requirements.txt) are streamed from our server when bootstrap.sh runs, so updates reach you without re-downloading the package.

🤗 AI Models

Browse and select from thousands of AI models

Key Features:

- ✓ Browse 100,000+ models from HuggingFace Hub

- ✓ Text, Vision, and Audio models organized by category

- ✓ Repository access status (HuggingFace, GitHub, Papers with Code, PyTorch Hub)

- ✓ Model details: size, type, description

- ✓ "Add to Setup" button instantly configures the selected model

💡 Tip: Filter models by type (Text, Vision, Audio) and use the search bar to find specific models. All models include AI-optimized settings automatically generated for your GPU.

🤖 AI-Powered Dashboard

Revolutionary AI monitoring that sets EzEpoch apart

GPT monitors your training and provides real-time insights

🚀 Revolutionary AI Features:

- 🤖 GPT Training Analysis: AI continuously monitors training progress and identifies issues

- 💡 Smart Recommendations: Real-time suggestions for parameter adjustments

- ⚠️ Predictive Alerts: AI predicts and prevents training failures before they happen

- 📈 Intelligent Optimization: Automatic parameter tuning based on training patterns

- 🎯 Performance Insights: AI explains why certain settings work better

- 🔄 Adaptive Learning: System learns from your training patterns to improve future sessions

🌟 EzEpoch's Competitive Edge: We're the ONLY platform that uses GPT to actively monitor and optimize your training in real-time. While others just show metrics, we provide intelligent insights that save you time, money, and prevent costly failures!

👤 Profile

Manage your account and preferences

Key Features:

- ✓ Personal information and contact details management

- ✓ AI monitoring preferences (email notifications, frequency)

- ✓ Your unique API hash for authentication

- ✓ Active training sessions display

- ✓ Timezone configuration for accurate scheduling

💡 Tip: Your API hash is used for secure authentication across all EzEpoch services. Copy it for use in your training packages or dashboard access.

📖 Complete Settings Dictionary

Comprehensive guide to every parameter and setting

🎯 Training Parameters

⚡ Precision & Optimization

🧠 Optimizers

🎛️ Training Methods

🖥️ GPU & Memory

🤖 AI Monitoring (EzEpoch Exclusive)

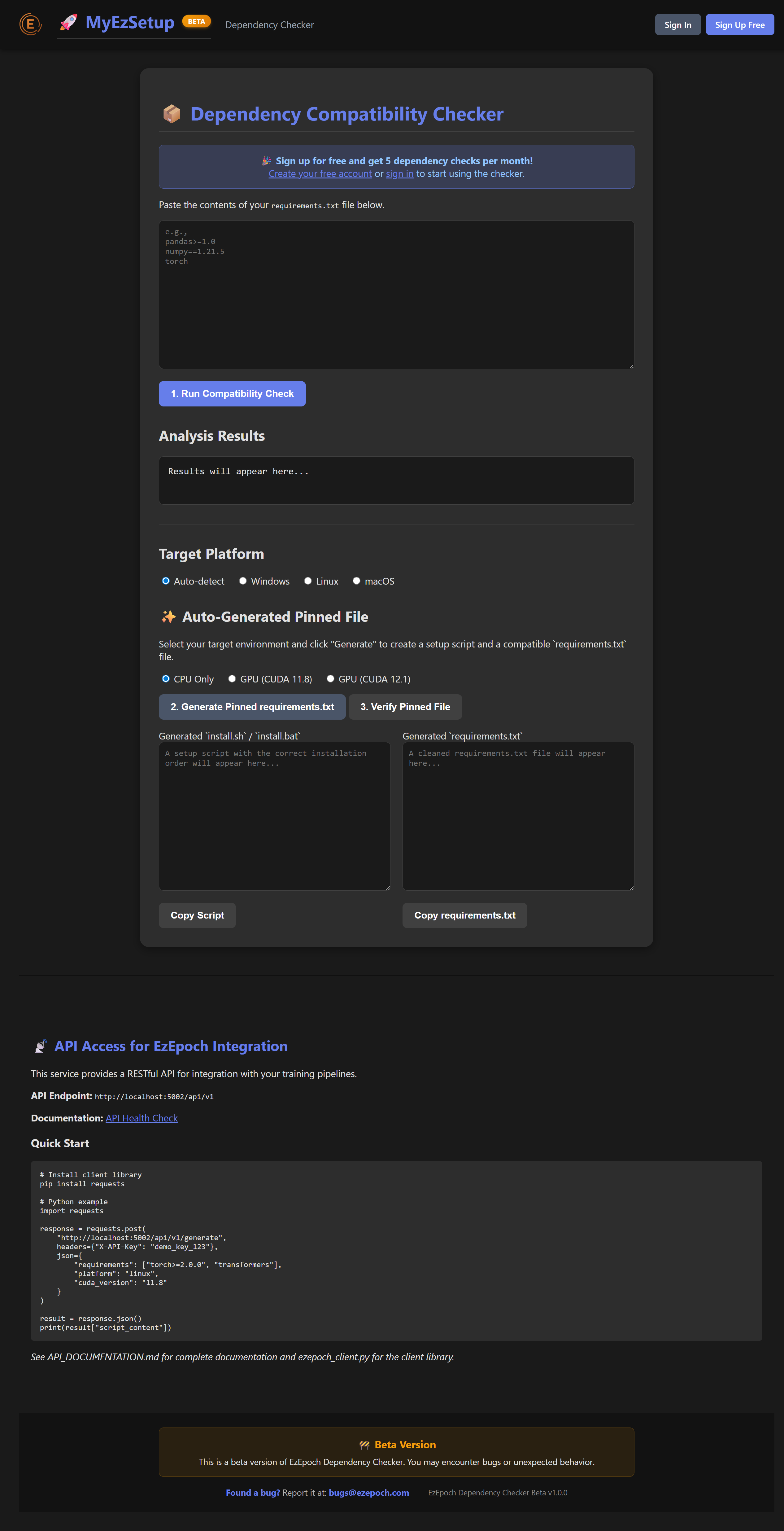

🔧 MyEzSetup - Dependency Checker

Intelligent dependency management and conflict resolution

🎯 Key Features:

- ✓ Dependency Compatibility Checker: Paste your requirements.txt and get instant compatibility analysis

- ✓ Auto-Generated Pinned Files: Creates conflict-free requirements.txt with proper version pinning

- ✓ Installation Scripts: Generates platform-specific setup scripts (install.sh / install.bat)

- ✓ CUDA Support: CPU-only, CUDA 11.8, and CUDA 12.1 configurations

- ✓ Platform Detection: Auto-detects Windows, Linux, macOS requirements

- ✓ API Access: RESTful API for integration with training pipelines

🌐 Access MyEzSetup: Visit www.myezsetup.com or ezsetup.ezepoch.com

💡 How It Works:

- Paste your requirements.txt file

- Run compatibility check to identify conflicts

- Select target platform and CUDA version

- Generate pinned requirements.txt and installation script

- Copy the script and run it on your system
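One part of this workflow, spotting requirements that are not pinned to an exact version, is easy to try locally. The sketch below is a simplified stand-in, not the MyEzSetup checker: `unpinned_requirements` is a hypothetical helper that treats any line without a `name==version` form as unpinned, and it does not understand environment markers or URLs.

```python
import re

# A pinned requirement looks like "name==version" (extras such as name[extra] allowed).
PINNED = re.compile(r"^[A-Za-z0-9_.\-\[\],]+==[^=\s]+$")


def unpinned_requirements(text):
    """Return requirement lines that are not pinned with ==."""
    unpinned = []
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop trailing comments
        if not line or line.startswith("-"):
            continue  # skip blanks and pip options such as -r or --index-url
        if not PINNED.match(line.replace(" ", "")):
            unpinned.append(line)
    return unpinned


sample = """
torch==2.1.0
transformers>=4.35
numpy
# comment
accelerate == 0.25.0
"""
print(unpinned_requirements(sample))
```

Lines reported here are the ones a pinned requirements.txt would replace with exact versions.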

🎉 Free Tier: Sign up for free and get 5 dependency checks per month. Upgrade to MyEzSetup ($4.99/month) or MyEzSetup API ($9.99/month) for unlimited access.